The concept of recursion is fundamental in mathematics and computer science. The simple definition is that a recursive program in a programming language is one that calls itself (just as a recursive function in mathematics is one that is defined in terms of itself). A recursive program cannot call itself always, or it would never stop (just as a recursive function cannot be defined in terms of itself always, or the definition would be circular); so a second essential ingredient is that there must be a termination condition when the program can cease to call itself (and when the mathematical function is not defined in terms of itself). All practical computations can be couched in a recursive framework. The study of recursion is intertwined with the study of recursively defined structures known as trees. We use trees both to help us understand and analyze recursive programs and as explicit data structures.

The connection between recursive programs and trees underlies a great deal of the material in algorithm analysis. We use trees to understand recursive programs; we use recursive programs to build trees; and we draw on the fundamental relationship between both (and recurrence relations) to analyze algorithms. Recursion helps us to develop elegant and efficient data structures and algorithms for all manner of applications.

Our primary purpose in this series is to examine recursive programs and data structures as practical tools. First, we discuss the relationship between mathematical recurrences and simple recursive programs, and we consider a number of examples of practical recursive programs. Next, we examine the fundamental recursive scheme known as divide and conquer, which we use to solve fundamental problems in several later sections. Then, we consider a general approach to implementing recursive programs known as dynamic programming, which provides effective and elegant solutions to a wide class of problems. Next, we consider trees, their mathematical properties, and associated algorithms in detail, including basic methods for tree traversal that underlie recursive tree-processing programs. Finally, we consider closely related algorithms for processing graphs—we look specifically at a fundamental recursive program, depth-first search, that serves as the basis for many graph-processing algorithms.

As we shall see, many interesting algorithms are simply expressed with recursive programs, and many algorithm designers prefer to express methods recursively. We also investigate nonrecursive alternatives in detail. Not only can we often devise simple stack-based algorithms that are essentially equivalent to recursive algorithms, but also we can often find nonrecursive alternatives that achieve the same final result through a different sequence of computations. The recursive formulation provides a structure within which we can seek more efficient alternatives.

1. Recursive Algorithms

A recursive algorithm is one that solves a problem by solving one or more smaller instances of the same problem. To implement recursive algorithms in C, we use recursive functions: a recursive function is one that calls itself. Recursive functions in C correspond to recursive definitions of mathematical functions. We begin our study of recursion by examining programs that directly evaluate mathematical functions. The basic mechanisms extend to provide a general-purpose programming paradigm, as we shall see.

Recurrence relations (see section Basic Recurrences in Principles of Algorithm Analysis) are recursively defined functions. A recurrence relation defines a function whose domain is the nonnegative integers either by some initial values or (recursively) in terms of its own values on smaller integers. Perhaps the most familiar such function is the factorial function, which is defined by the recurrence relation

$$N! = N \cdot (N-1)!, \quad \text{for } N \ge 1, \text{ with } 0! = 1.$$

This definition corresponds directly to the recursive C function in Program 1.

Program 1 Factorial function (recursive implementation)

This recursive function computes the function N!, using the standard recursive definition. It returns the correct value when called with N nonnegative and sufficiently small that N! can be represented as an int.
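The program listing itself did not survive in this copy. A minimal sketch consistent with the description above might look as follows; the function name `factorial` is an assumption, and the code relies on the stated precondition that N is nonnegative and small enough for N! to fit in an int.

```c
/* Sketch of a recursive factorial, following the recurrence
   N! = N * (N-1)! with 0! = 1.
   Assumes N >= 0 and that N! fits in an int. */
int factorial(int N)
{
    if (N == 0) return 1;          /* termination condition: 0! = 1 */
    return N * factorial(N - 1);   /* recursive call on a smaller argument */
}
```

Calling `factorial(5)` under these assumptions evaluates 5 * 4 * 3 * 2 * 1 * 1.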


Program 1 is equivalent to a simple loop: a for loop that multiplies a running product by each of the integers 1, 2, ..., N in turn performs the same computation.
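As an illustration of the loop form, here is one possible iterative version. The name `factorial_iter` and the local variable names are assumptions made for this sketch, and the same precondition applies (N nonnegative, N! representable as an int).

```c
/* Iterative counterpart of the recursive factorial (hypothetical name).
   Assumes N >= 0 and that N! fits in an int. */
int factorial_iter(int N)
{
    int i, t;
    for (t = 1, i = 1; i <= N; i++)
        t *= i;                    /* after each pass, t holds i! */
    return t;
}
```

Unlike the recursive version, this one needs two local variables to carry the loop counter and the running product.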

As we shall see, it is always possible to transform a recursive program into a nonrecursive one that performs the same computation. Conversely, we can express without loops any computation that involves loops, using recursion, as well.
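To make the second direction concrete, the sketch below sums an array once with a loop and once with recursion only. Both function names are hypothetical and chosen for this example; the recursive version rests on the observation that the sum of n items is the sum of the first n-1 items plus the last one.

```c
/* Loop version: sum of a[0..n-1]. */
int sum_loop(const int a[], int n)
{
    int i, s = 0;
    for (i = 0; i < n; i++)
        s += a[i];
    return s;
}

/* Loop-free version of the same computation.
   Basis: an empty range sums to 0.
   Step: sum of n items = sum of the first n-1 items + a[n-1]. */
int sum_rec(const int a[], int n)
{
    if (n == 0) return 0;
    return sum_rec(a, n - 1) + a[n - 1];
}
```

The recursive version uses stack space proportional to n, so it is a demonstration of the principle rather than a recommended replacement for the loop.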

We use recursion because it often allows us to express complex algorithms in a compact form, without sacrificing efficiency. For example, the recursive implementation of the factorial function obviates the need for local variables. The cost of the recursive implementation is borne by the mechanisms in the programming systems that support function calls, which use the equivalent of a built-in pushdown stack. Most modern programming systems have carefully engineered mechanisms for this task. Despite this advantage, as we shall see, it is all too easy to write a simple recursive function that is extremely inefficient, and we need to exercise care to avoid being burdened with intractable implementations.

Program 1 illustrates the basic features of a recursive program: it calls itself (with a smaller value of its argument), and it has a termination condition in which it directly computes its result. We can use mathematical induction to convince ourselves that the program works as intended:

- It computes 0! (basis).

- Under the assumption that it computes k! for k < N (inductive hypothesis), it computes N!.

Reasoning like this can provide us with a quick path to developing algorithms that solve complex problems, as we shall see. In a programming language such as C, there are few restrictions on the kinds of programs that we write, but we strive to limit ourselves in our use of recursive functions to those that embody inductive proofs of correctness like the one outlined in the previous paragraph. Although we do not consider formal correctness proofs in this series, we are interested in putting together complicated programs for difficult tasks, and we need to have some assurance that the tasks will be solved properly. Mechanisms such as recursive functions can provide such assurances while giving us compact implementations. Practically speaking, the connection to mathematical induction tells us that we should ensure that our recursive functions satisfy two basic properties:

- They must explicitly solve a basis case.

- Each recursive call must involve smaller values of the arguments.

These points are vague; they amount to saying that we should have a valid inductive proof for each recursive function that we write. Still, they provide useful guidance as we develop implementations. Program 2 is an amusing example that illustrates the need for an inductive argument. It is a recursive function that violates the rule that each recursive call must involve smaller values of the arguments, so we cannot use mathematical induction to understand it. Indeed, it is not known whether or not this computation terminates for every N, if there are no bounds on the size of N. For small integers that can be represented as ints, we can check that the program terminates (see Figure 1), but for large integers (64-bit words, say), we do not know whether or not this program goes into an infinite loop.

Program 2 A questionable recursive program

If the argument N is odd, this function calls itself with 3N + 1 as an argument; if N is even, it calls itself with N/2 as an argument. We cannot use induction to prove that this program terminates, because not every recursive call uses an argument smaller than the one given.
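The listing for Program 2 is missing from this copy. A sketch that matches the description could be written as below; the name `puzzle`, the choice of 1 as the stopping value, and the return value are assumptions. The code also assumes N is at least 1 and that 3N + 1 never overflows an int along the way.

```c
/* Sketch of the questionable recursion described above.
   Assumes N >= 1 and that 3*N + 1 stays within the range of int.
   Returns 1 if and when the chain of calls reaches N == 1. */
int puzzle(int N)
{
    if (N == 1) return 1;             /* assumed stopping value */
    if (N % 2 == 0)
        return puzzle(N / 2);         /* even: argument gets smaller */
    else
        return puzzle(3 * N + 1);     /* odd: argument gets LARGER */
}
```

The odd branch is what defeats the inductive argument: the call on 3N + 1 is not a call on a smaller instance, so nothing in the structure of the function guarantees that the basis case is ever reached.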

Figure 1 Example of a recursive call chain

This nested sequence of function calls eventually terminates, but we cannot prove that the recursive function in Program 2 does not have arbitrarily deep nesting for some argument. We prefer recursive programs that always invoke themselves with smaller arguments.

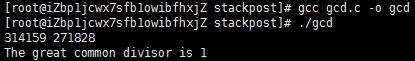

Program 3 is a compact implementation of Euclid’s algorithm for finding the greatest common divisor of two integers. It is based on the observation that the greatest common divisor of two integers x and y with x > y is the same as the greatest common divisor of y and x mod y (the remainder when x is divided by y). A number t divides both x and y if and only if t divides both y and x mod y, because x is equal to x mod y plus a multiple of y. The recursive calls made for an example invocation of this program are shown in Figure 2. For Euclid’s algorithm, the depth of the recursion depends on arithmetic properties of the arguments (it is known to be logarithmic).

Program 3 Euclid’s algorithm

One of the oldest-known algorithms, dating back over 2000 years, is this recursive method for finding the greatest common divisor of two integers.
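Since the listing is not reproduced here, the following is a sketch of how the observation in the text translates into C. The name `gcd` and the parameter names are assumptions, as is the convention that both arguments are nonnegative and that the greatest common divisor of m and 0 is m.

```c
/* Sketch of Euclid's algorithm.
   Assumes m >= 0 and n >= 0, not both zero.
   gcd(m, 0) = m; otherwise gcd(m, n) = gcd(n, m mod n). */
int gcd(int m, int n)
{
    if (n == 0) return m;        /* basis case */
    return gcd(n, m % n);        /* second argument strictly decreases */
}
```

Because m % n is always smaller than n, the second argument shrinks on every call, which is exactly the property an inductive proof of termination needs. If the first argument is the smaller one, the first call simply swaps the two.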

Figure 2 Example of Euclid’s algorithm

This nested sequence of function calls illustrates the operation of Euclid’s algorithm in discovering that 314159 and 271828 are relatively prime.

Program 4 is an example with multiple recursive calls. It is another expression evaluator, performing essentially the same computations as Program 2 in Abstract Data Types~Stack ADT, but on prefix (rather than postfix) expressions, and letting recursion take the place of the explicit pushdown stack. Figure 3 shows the operation of Program 4 on a sample prefix expression. The multiple recursive calls mask a complex series of computations. Like most recursive programs, this program is best understood inductively: Assuming that it works properly for simple expressions, we can convince ourselves that it works properly for complex ones. This program is a simple example of a recursive descent parser—we can use the same process to convert C programs into machine code.

Program 4 Recursive program to evaluate prefix expressions

To evaluate a prefix expression, we either convert a number from ASCII to binary (in the while loop at the end), or perform the operation indicated by the first character in the expression on the two operands, evaluated recursively. This function is recursive, but it uses a global array containing the expression and an index to the current character in the expression. The index is advanced past each subexpression evaluated.
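The sketch below follows that description for expressions built from nonnegative decimal integers, `+`, and `*`, separated by spaces. The global names `expr` and `pos` and the restriction to these two operators are assumptions made for the example. The two operands are evaluated in separate statements because C does not specify the order in which the operands of `+` or `*` are evaluated, and each call here has the side effect of advancing the shared index.

```c
/* Assumed globals: the expression text and the index of the
   current character within it. */
const char *expr;
int pos;

/* Evaluate the prefix expression that starts at expr[pos] and
   leave pos just past it. Assumes well-formed input. */
int eval(void)
{
    int x = 0;
    while (expr[pos] == ' ') pos++;          /* skip blanks */
    if (expr[pos] == '+') {
        int left, right;
        pos++;
        left = eval();                       /* first operand */
        right = eval();                      /* second operand */
        return left + right;
    }
    if (expr[pos] == '*') {
        int left, right;
        pos++;
        left = eval();
        right = eval();
        return left * right;
    }
    /* Otherwise the subexpression is a number: ASCII to binary. */
    while (expr[pos] >= '0' && expr[pos] <= '9')
        x = 10 * x + (expr[pos++] - '0');
    return x;
}
```

To use it, point `expr` at a string such as `"+ 12 30"`, set `pos` to 0, and call `eval()`. Each call consumes exactly one subexpression from the front of what remains, which is why no call ever needs to know in advance where its operands end.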

Figure 3 Prefix expression evaluation example

This nested sequence of function calls illustrates the operation of the recursive prefix-expression-evaluation algorithm on a sample expression. For simplicity, the expression arguments are shown here. The algorithm itself never explicitly decides the extent of its argument string: rather, it takes what it needs from the front of the string.

A precise inductive proof that Program 4 evaluates the expression properly is certainly much more challenging to write than are the proofs for functions with integer arguments that we have been discussing, and we shall encounter recursive programs and data structures that are even more complicated than this one throughout this series. Accordingly, we do not pursue the idealistic goal of providing complete inductive proofs of correctness for every recursive program that we write. In this case, the ability of the program to “know” how to separate the operands corresponding to a given operator seems mysterious at first (perhaps because we cannot immediately see how to do this separation at the top level), but is actually a straightforward calculation (because the path to pursue at each function call is unambiguously determined by the first character in the expression).

In principle, we can replace any for loop by an equivalent recursive program. Often, the recursive program is a more natural way to express the computation than the for loop, so we may as well take advantage of the mechanism provided by the programming system that supports recursion. There is one hidden cost, however, that we need to bear in mind. As is plain from the examples that we examined in Figures 1 through 3, when we execute a recursive program, we are nesting function calls, until we reach a point where we do not do a recursive call, and we return instead. In most programming environments, such nested function calls are implemented using the equivalent of built-in pushdown stacks. The depth of the recursion is the maximum degree of nesting of the function calls over the course of the computation. Generally, the depth will depend on the input. For example, the depths of the recursions for the examples depicted in Figures 2 and 3 are 9 and 4, respectively. When using a recursive program, we need to take into account that the programming environment has to maintain a pushdown stack of size proportional to the depth of the recursion. For huge problems, the space needed for this stack might prevent us from using a recursive solution.
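One way to observe the depth of a recursion directly is to pass the current nesting level along as an extra argument and record the largest value seen. The sketch below does this for Euclid's algorithm; the names `gcd_traced` and `max_depth` are hypothetical, and the top-level call is counted as level 0.

```c
/* Deepest nesting level reached so far (top-level call is level 0). */
int max_depth = 0;

/* Euclid's algorithm with an extra parameter that tracks nesting.
   Assumes m >= 0 and n >= 0, not both zero. */
int gcd_traced(int m, int n, int level)
{
    if (level > max_depth) max_depth = level;
    if (n == 0) return m;
    return gcd_traced(n, m % n, level + 1);
}
```

Resetting `max_depth` to 0 and calling `gcd_traced(314159, 271828, 0)` makes ten calls in all, nested nine levels below the first, which agrees with the depth of 9 quoted above for Figure 2.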

Data structures built from nodes with pointers are inherently recursive. For example, our definition of linked lists in Elementary Data Structures ~ Linked Lists II (Definition 1) is recursive. Therefore, recursive programs provide natural implementations of many commonly used functions for manipulating such data structures. Program 5 comprises four examples. We use such implementations frequently throughout the series, primarily because they are so much easier to understand than are their nonrecursive counterparts. However, we must exercise caution in using programs such as those in Program 5 when processing huge lists, because the depth of the recursion for those functions can be proportional to the length of the lists, so the space required for the recursive stack might become prohibitive.

Program 5 Examples of recursive functions for linked lists

These recursive functions for simple list-processing tasks are easy to express, but may not be useful for huge lists because the depth of the recursion may be proportional to the length of the list.

The first function, count, counts the number of nodes on the list. The second, traverse, calls the function visit for each node on the list, from beginning to end. These two functions are both also easy to implement with a for or while loop. The third function, traverseR, does not have a simple iterative counterpart. It calls the function visit for every node on the list, but in reverse order.

The fourth function, delete, makes the structural changes needed for a given item to be deleted from a list. It returns a link to the (possibly altered) remainder of the list: the link returned is x, except when x->item is v, in which case the link returned is x->next (and the recursion stops).
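The four functions can be sketched as follows. The node layout (an int item and a next link), the use of `free` on the deleted node, and the helpers `cons` and `record` are assumptions introduced so that the example is self-contained; only the names count, traverse, traverseR, delete, and visit come from the text.

```c
#include <stdlib.h>

/* Assumed node type: an int item and a link to the next node. */
typedef struct node *link;
struct node { int item; link next; };

/* Number of nodes on the list. */
int count(link x)
{
    if (x == NULL) return 0;
    return 1 + count(x->next);
}

/* Call visit for every node, from beginning to end. */
void traverse(link h, void (*visit)(link))
{
    if (h == NULL) return;
    (*visit)(h);
    traverse(h->next, visit);
}

/* Call visit for every node in reverse order:
   recurse to the end first, then visit on the way back. */
void traverseR(link h, void (*visit)(link))
{
    if (h == NULL) return;
    traverseR(h->next, visit);
    (*visit)(h);
}

/* Delete the first node whose item is v and return a link to the
   (possibly altered) list. Assumes nodes were allocated with malloc. */
link delete(link x, int v)
{
    if (x == NULL) return NULL;
    if (x->item == v) {
        link t = x->next;
        free(x);
        return t;                 /* recursion stops here */
    }
    x->next = delete(x->next, v);
    return x;
}

/* Hypothetical helper: allocate a node in front of an existing list. */
link cons(int item, link next)
{
    link x = malloc(sizeof *x);
    if (x == NULL) abort();
    x->item = item;
    x->next = next;
    return x;
}

/* Hypothetical visit function: record the items in the order visited. */
int seen[16];
int nseen = 0;
void record(link x) { if (nseen < 16) seen[nseen++] = x->item; }
```

For a list built as `cons(1, cons(2, cons(3, NULL)))`, traverse with `record` stores 1, 2, 3 and traverseR stores 3, 2, 1. The only difference between the two is whether the visit happens before or after the recursive call.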

Some programming environments automatically detect and eliminate tail recursion, when the last action of a function is a recursive call, because it is not strictly necessary to add to the depth of the recursion in such a case. This improvement would effectively transform the count, traversal, and deletion functions in Program 5 into loops, but it does not apply to the reverse-order traversal function.
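To illustrate what eliminating tail recursion amounts to, the sketch below shows a front-to-back traversal whose recursive call is its last action, next to the loop that results from replacing that call with an update of the argument and a jump back to the top. The node type and the names `traverse_loop`, `total`, and `add_item` are assumptions for this example.

```c
#include <stddef.h>

/* Assumed node type: an int item and a link to the next node. */
typedef struct node *link;
struct node { int item; link next; };

/* Tail-recursive form: nothing remains to be done after the call. */
void traverse(link h, void (*visit)(link))
{
    if (h == NULL) return;
    (*visit)(h);
    traverse(h->next, visit);      /* tail call */
}

/* Loop form: the tail call becomes "h = h->next; go back to the test". */
void traverse_loop(link h, void (*visit)(link))
{
    while (h != NULL) {
        (*visit)(h);
        h = h->next;
    }
}

/* Hypothetical visit function: builds a decimal number from the items
   in the order visited, so the two traversals can be compared. */
int total = 0;
void add_item(link x) { total = 10 * total + x->item; }
```

The loop form uses constant stack space regardless of the length of the list. The same rewriting is not available for the reverse-order traversal, because there the visit still has to happen after the recursive call returns.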