An essential characteristic of the divide-and-conquer algorithms that we considered in Recursion and Trees~ Divide and Conquer is that they partition the problem into independent subproblems. When the subproblems are not independent, the situation is more complicated, primarily because direct recursive implementation of even the simplest algorithms of this type can require unthinkable amounts of time. In this article, we consider a systematic technique for avoiding this pitfall for an important class of problems.

For example, Program 1 is a direct recursive implementation of the recurrence that defines the Fibonacci numbers (see in Principles of Algorithm Analysis I). Do not use this program: It is spectacularly inefficient. ![]()

![]() The awful truth is that Program 1 is an exponential-time algorithm for this trivial computation. Figure 1, which depicts the recursive calls for a small example, makes plain the amount of recomputation that is involved.

The awful truth is that Program 1 is an exponential-time algorithm for this trivial computation. Figure 1, which depicts the recursive calls for a small example, makes plain the amount of recomputation that is involved.

Program 1 Fibonacci numbers (recursive implementation)

This program, although compact and elegant, is not usable because it takes exponential time to compute F(N). The running time to compute F(N+1) is Φ ≈ 1.6 times as long as the running time to compute F(N). For example, since ![]() if we notice that our computer takes about a second to compute F(N), we know that it will take more than a minute to compute F(N+9) and more than an hour to compute F(N+18).

if we notice that our computer takes about a second to compute F(N), we know that it will take more than a minute to compute F(N+9) and more than an hour to compute F(N+18). Figure 1 Structure of recursive algorithm for Fibonacci numbers

Figure 1 Structure of recursive algorithm for Fibonacci numbers

The picture of the recursive calls needed to used to compute F(8) by the standard recursive algorithm illustrates how recursion with overlapping subproblems can lead to exponential costs. In this case, the second recursive call ignores the computations done during the first, which results in massive recomputation because the effect multiplies recursively. The recursive calls to compute F(6) = 8 (which are reflected in the right subtree of the root and the left subtree of the left subtree of the root) are listed below.

By contrast, it is easy to compute F(N) in linear (proportional to N) time, by computing the first N Fibonacci numbers and storing them in an array: The numbers grow exponentially, so the array is small—for example, F(45) = 1836311903 is the largest Fibonacci number that can be represented as a 32-bit integer, so an array of size 46 will do. This technique gives us an immediate way to get numerical solutions for any recurrence relation. In the case of Fibonacci numbers, we can even dispense with the array, and keep track of just the previous two values; for many other commonly encountered recurrences, we need to maintain the array with all the known values.

The numbers grow exponentially, so the array is small—for example, F(45) = 1836311903 is the largest Fibonacci number that can be represented as a 32-bit integer, so an array of size 46 will do. This technique gives us an immediate way to get numerical solutions for any recurrence relation. In the case of Fibonacci numbers, we can even dispense with the array, and keep track of just the previous two values; for many other commonly encountered recurrences, we need to maintain the array with all the known values.

A recurrence is a recursive function with integer values. Our discussion in the previous paragraph leads to the conclusion that we can evaluate any such function by computing all the function values in order starting at the smallest, using previously computed values at each step to compute the current value. We refer to this technique as bottom-up dynamic programming. It applies to any recursive computation, provided that we can afford to save all the previously computed values. It is an algorithm-design technique that has been used successfully for a wide range of problems. We have to pay attention to a simple technique that can improve the running time of an algorithm from exponential to linear !!!

Top-down dynamic programming is an even simpler view of the technique that allows us to execute recursive functions at the same cost as (or less cost than) bottom-up dynamic programming, in an automatic way. We instrument the recursive program to save each value that it computes (as its final action), and to check the saved values to avoid recomputing any of them (as its first action). Program 2 is the mechanical transformation of Program 1 that reduces its running time to be linear via top-down dynamic programming. Figure 2 shows the drastic reduction in the number of recursive calls achieved by this simple automatic change. Top-down dynamic programming is also sometimes called memoization.

Program 2 Fibonacci numbers (dynamic programming)

By saving the values that we compute in an array external to the recursive procedure, we explicitly avoid any recomputation. This program computes ![]() time used by Program 1.

time used by Program 1. Figure 2 Top-down dynamic programming for computing Fibonacci numbers

Figure 2 Top-down dynamic programming for computing Fibonacci numbers

This picture of the recursive calls used to compute F(8) by the top-down dynamic programming implementation of the recursive algorithm illustrates how saving computed values cuts the cost from exponential (see Figure 1) to linear.

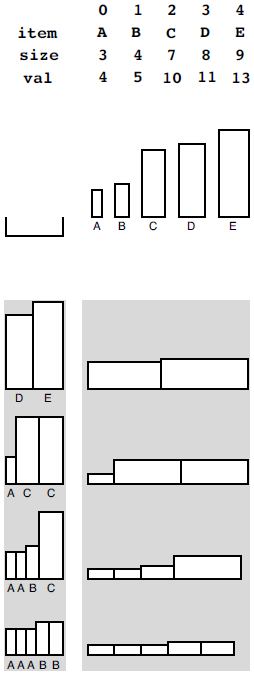

For a more complicated example, consider the knapsack problem: A thief robbing a safe finds it filled with N types of items of varying size and value, but has only a small knapsack of capacity M to use to carry the goods. The knapsack problem is to find the combination of items which the thief should choose for the knapsack in order to maximize the total value of all the stolen items. For example, with the item types depicted in Figure 3, a thief with a knapsack of size 17 can take five A’s (but not six) for a total take of 20, or a D and an E for a total take of 24, or one of many other combinations. Our goal is to find an efficient algorithm that somehow finds the maximum among all the possibilities, given any set of items and knapsack capacity.

Figure 3 Knapsack example

An instance of the knapsack problem (top) consists of a knapsack capacity and a set of items of varying size (horizontal dimension) and value (vertical dimension). This figure shows four different ways to fill a knapsack of size 17, two of which lead to the highest possible total value of 24.

There are many applications in which solutions to the knapsack problem are important. For example, a shipping company might wish to know the best way to load a truck or cargo plane with items for shipment. In such applications, other variants to the problem might arise as well: for example, there might be a limited number of each kind of item available, or there might be two trucks. Many such variants can be handled with the same approach that we are about to examine for solving the basic problem just stated; others turn out to be much more difficult. There is a fine line between feasible and infeasible problems of this type.

In a recursive solution to the knapsack problem, each time that we choose an item, we assume that we can (recursively) find an optimal way to pack the rest of the knapsack. For a knapsack of size cap, we determine, for each item i among the available item types, what total value we could carry by placing i in the knapsack with an optimal packing of other items around it. The optimal packing is simply the one we have discovered (or will discover) for the smaller knapsack of size cap-item[ i ].size. This solution exploits the principle that optimal decisions, once made, do not need to be changed. Once we know how to pack knapsacks of smaller capacities with optimal sets of items, we do not need to reexamine those problems, regardless of what the next items are.

Program 3 is a direct recursive solution based on this discussion. Again, this program is not feasible for use in solving actual problems, because it takes exponential time due to massive recomputation (see Figure 4), but we can automatically apply top-down dynamic programming to eliminate this problem, as shown in Program 4. As before, this technique eliminates all recomputation, as shown in Figure 5.

Program 3 Knapsack problem (recursive implementation)

As we warned about the recursive solution to the problem of computing the Fibonacci numbers, do not use this program, because it will take exponential time and therefore may not ever run to completion even for small problems. It does, however, represent a compact solution that we can improve easily (see Program 4). This code assumes that items are structures with a size and a value, defined with![]() and that we have an array of N items of type Item. For each possible item, we calculate (recursively) the maximum value that we could achieve by including that item, then take the maximum of all those values.

and that we have an array of N items of type Item. For each possible item, we calculate (recursively) the maximum value that we could achieve by including that item, then take the maximum of all those values. Figure 4 Recursive structure of knapsack algorithm

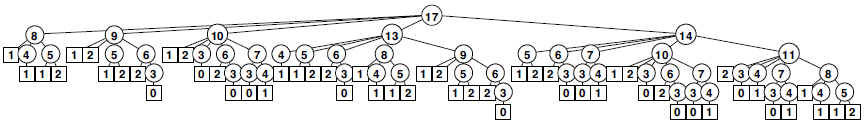

Figure 4 Recursive structure of knapsack algorithm

This tree represents the recursive call structure of the simple recursive knapsack algorithm in Program 3. The number in each node represents the remaining capacity in the knapsack. The algorithm suffers the same basic problem of exponential performance due to massive recomputation for overlapping subproblems that we considered in computing Fibonacci numbers (see Figure 1).

Program 4 Knapsack problem (dynamic programming)

This mechanical modification to the code of Program 3 reduces the running time from exponential to linear. We simply save any function values that we compute, then retrieve any saved values whenever we need them (using a sentinel value to represent unknown values), rather than making recursive calls. We save the index of the item, so that we can reconstruct the contents of the knapsack after the computation, if we wish: itemKnown[M] is in the knapsack, the remaining contents are the same as for the optimal knapsack of size M-itemKnown[M].size so itemKnown[M-items[M].size] is in the knapsack, and so forth. Figure 5 Top-down dynamic programming for knapsack algorithm

Figure 5 Top-down dynamic programming for knapsack algorithm

As it did for the Fibonacci numbers computation, the technique of saving known values reduces the cost of the knapsack algorithm from exponential (see Figure 4) to linear.

By design, dynamic programming eliminates all recomputation in any recursive program, subject only to the condition that we can afford to save the values of the function for arguments smaller than the call in question.

Property 1 Dynamic programming reduces the running time of a recursive function to be at most the time required to evaluate the function for all arguments less than or equal to the given argument, treating the cost of a recursive call as constant.

For the knapsack problem, this property implies that the running time is proportional to NM. Thus, we can solve the knapsack problem easily when the capacity is not huge; for huge capacities, the time and space requirements may be prohibitively large.

Bottom-up dynamic programming applies to the knapsack problem, as well. Indeed, we can use the bottom-up approach any time that we use the top-down approach, although we need to take care to ensure that we compute the function values in an appropriate order, so that each value that we need has been computed when we need it. For functions with single integer arguments such as the two that we have considered, we simply proceed in increasing order of the argument; for more complicated recursive functions, determining a proper order can be a challenge.

For example, we do not need to restrict ourselves to recursive functions with single integer arguments. When we have a function with multiple integer arguments, we can save solutions to smaller subproblems in multidimensional arrays, one for each argument. Other situations involve no integer arguments at all, but rather use an abstract discrete problem formulation that allows us to decompose problems into smaller ones.

In top-down dynamic programming, we save known values; in bottom-up dynamic programming, we precompute them. We generally prefer top-down to bottom-up dynamic programming, because

- It is a mechanical transformation of a natural problem solution.

- The order of computing the subproblems takes care of itself.

- We may not need to compute answers to all the subproblems.

Dynamic-programming applications differ in the nature of the subproblems and in the amount of information that we need to save regarding the subproblems.

A crucial point that we cannot overlook is that dynamic programming becomes ineffective when the number of possible function values that we might need is so high that we cannot afford to save (top-down) or precompute (bottom-up) all of them. For example, if M and the item size are 64-bit quantities or floating-point numbers in the knapsack problem, we will not be able to save values by indexing into an array. This distinction causes more than a minor annoyance—it poses a fundamental difficult. No good solution is known for such problems;

Dynamic programming is an algorithm-design technique that is primarily suited for the advanced problems of the type that we shall consider in series 2 through 8. Most o the algorithms that we discuss in series 2 through 4 are divide-and-conquer methods with nonoverlapping subproblems, and we are focusing on subquadratic or sublinear, rather than subexponential, performance. However, top-down dynamic programming is a basic technique for developing efficient implementations of recursive algorithms that belongs in the toolbox of anyone engaged in algorithm design and implementation.